What is LLM Optimization?

LLM Optimization is the process of structuring your website’s content, metadata, and entities so that Large Language Models (LLMs), such as OpenAI’s GPT, Google Gemini, Anthropic Claude, and Mistral AI’s models, can easily understand, interpret, and retrieve information from it.

The goal of LLM Optimization is not only to improve visibility in AI-driven search experiences like Google’s AI Overviews or Bing Copilot, but also to make your brand’s content more referenceable when LLMs generate answers, summaries, or recommendations.

In short, LLM Optimization helps ensure your information is machine-interpretable, factually stable, and contextually rich enough to be used confidently by AI models.

How Does LLM Optimization Work?

Large Language Models, and the search and retrieval systems built around them, rely on vector embeddings, semantic relationships, and (in some products) knowledge graphs to understand and reproduce human language. When you optimize for LLMs, you’re essentially aligning your content with these data systems.

Here’s the pipeline that LLM Optimization targets, step by step:

- Content Ingestion: AI crawlers or retrieval systems read your content, metadata, and structured data.

- Semantic Embedding: Text is converted into numerical vectors that represent meaning and relationships.

- Contextual Ranking: A retrieval or ranking system (and then the LLM itself) evaluates relevance, clarity, and factual grounding based on surrounding entities.

- Generation and Attribution: When a user asks a question, the system retrieves the passages whose embeddings best match the query, and the model may cite or paraphrase your content in its output.
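The four steps above can be sketched in miniature. This is a toy illustration, not how any particular AI product works: it assumes a hypothetical three-page site at example.com and swaps the learned neural embeddings that real systems use for a simple bag-of-words vector, but the ingest, embed, rank, and retrieve flow is the same idea.

```python
import math
import re
from collections import Counter

# Hypothetical passages standing in for crawled page content.
PASSAGES = {
    "https://example.com/pricing": "Example Co offers three pricing plans billed monthly or annually.",
    "https://example.com/about": "Example Co is a software company founded to build rank tracking tools.",
    "https://example.com/blog/schema": "Schema markup uses JSON-LD to describe organizations and products.",
}


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase bag-of-words term counts.
    Real systems use dense vectors produced by a neural embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Ingestion + embedding: index every passage once, ahead of any query.
INDEX = {url: embed(text) for url, text in PASSAGES.items()}


def retrieve(query: str, k: int = 2) -> list[tuple[str, float]]:
    """Rank indexed passages by similarity to the query; return the top k."""
    q = embed(query)
    scored = [(url, cosine(q, vec)) for url, vec in INDEX.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


for url, score in retrieve("What pricing plans does Example Co offer?"):
    print(f"{score:.3f}  {url}")
```

The pricing page ranks first because it shares the most terms with the question; a generator would then receive that passage as context for its answer.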

Optimizing for this process means presenting information in formats and contexts that LLMs can easily parse and trust.

Why Is LLM Optimization Important?

Traditional SEO focused on keyword alignment and backlinks. In contrast, LLM Optimization focuses on data clarity, factual accuracy, and entity consistency — the elements that determine how AI models learn, summarize, and represent information.

1. Visibility in AI-Powered Search

LLMs increasingly power search results, summaries, and recommendation engines. Without optimization, your brand risks being omitted from generative outputs.

2. Mitigating Hallucinations

When AI models lack structured or verified data on a topic, they may generate (“hallucinate”) plausible but false statements. Publishing clear, accurate information helps reduce the chance that those gaps are filled incorrectly.

3. Entity-Level Authority

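LLMs and the search systems that feed them represent meaning through entities and their relationships rather than exact keyword matches. Clear entity definitions and schema markup can increase your likelihood of being retrieved for related prompts.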

4. Future-Proofing SEO

As AI systems evolve, structured and semantic clarity will determine whether your brand remains discoverable in AI-driven ecosystems.

How to Optimize for LLMs

1. Use Clear, Factual Language

Write unambiguous sentences and avoid excessive marketing language. LLMs favor explicit facts and definitions that can be verified.

2. Add Schema Markup and Structured Data

Include Organization, Person, FAQPage, Product, and HowTo schemas where they match your content. JSON-LD markup gives crawlers and retrieval systems an explicit, machine-readable description of your entities.
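A minimal sketch of generating Organization markup as a JSON-LD script tag, assuming a hypothetical company "Example Co" at www.example.com; every value below is a placeholder to replace with your own verified facts. The `@id` gives the entity a stable identifier that other markup on the site can reference.

```python
import json

# Hypothetical organization details; replace with your own verified facts.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "@id": "https://www.example.com/#organization",
    "name": "Example Co",
    "url": "https://www.example.com/",
    "logo": "https://www.example.com/logo.png",
    "description": "Example Co builds rank tracking software for SEO teams.",
}


def to_jsonld_script(data: dict) -> str:
    """Serialize a schema.org object into an HTML <script> tag for JSON-LD."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a string value can never close the script element early;
    # "\/" is a valid JSON escape for "/", so parsers read the same value.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(to_jsonld_script(organization))
```

The output goes in the page's HTML; validating it with a structured-data testing tool before publishing is a sensible extra step.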

3. Reinforce Entity Connections

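Link your content and entities to recognized knowledge sources such as Wikidata and to authoritative external sites (for example, through the schema.org sameAs property), which can help systems like Google’s Knowledge Graph associate them with your brand. Consistency across platforms builds trust.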
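One way to act on this is to keep an inventory of your brand's external profiles, check that the name matches everywhere, and reuse the profile URLs as sameAs values. The sketch below assumes a hypothetical inventory; the Wikidata ID and profile slugs are placeholders, and the normalization rules are illustrative choices.

```python
import unicodedata

# Hypothetical records of how the brand appears on different platforms.
# "Q000000" is a placeholder, not a real Wikidata item.
PROFILES = {
    "website": {"name": "Example Co", "url": "https://www.example.com/"},
    "wikidata": {"name": "Example Co", "url": "https://www.wikidata.org/wiki/Q000000"},
    "linkedin": {"name": "Example Co.", "url": "https://www.linkedin.com/company/example-co"},
    "crunchbase": {"name": "Example Company Inc", "url": "https://www.crunchbase.com/organization/example-co"},
}


def normalize(name: str) -> str:
    """Fold Unicode form, case, whitespace, and a trailing period so trivial variants match."""
    name = unicodedata.normalize("NFKC", name).casefold().strip()
    return name.rstrip(".")


def inconsistent_names(profiles: dict) -> dict:
    """Return platforms whose normalized name differs from the website's."""
    canonical = normalize(profiles["website"]["name"])
    return {
        platform: record["name"]
        for platform, record in profiles.items()
        if normalize(record["name"]) != canonical
    }


def same_as(profiles: dict) -> list:
    """Collect external profile URLs for a schema.org sameAs property."""
    return [record["url"] for platform, record in profiles.items() if platform != "website"]


print(inconsistent_names(PROFILES))  # flags the Crunchbase record
print(same_as(PROFILES))
```

"Example Co." passes because only the trailing period differs, while "Example Company Inc" is flagged for manual review.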

4. Include Source Citations

Retrieval systems tend to favor sources with clear attribution. Cite statistics, studies, or references directly in your text, making it easy for retrieval systems to associate your claims with credible sources.
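A rough editorial check can flag sentences that state a number without linking to a source. This is a heuristic sketch, assuming drafts are written with markdown-style `[text](url)` links; the sample draft is hypothetical, and the number pattern will also flag things like years, so treat hits as prompts for review rather than errors.

```python
import re

# Percentages (e.g. "42%", "12.5 %") or standalone numbers with two or more digits.
STAT = re.compile(r"\d+(?:\.\d+)?\s*%|\b\d{2,}\b")
# A markdown-style link to an http(s) source: [text](url)
LINK = re.compile(r"\[[^\]]+\]\(https?://[^)]+\)")


def uncited_statistics(text: str) -> list:
    """Return sentences that contain a statistic but no linked source."""
    # Split only where sentence punctuation is followed by whitespace,
    # so decimals such as "12.5" and dots inside URLs stay intact.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [s for s in sentences if STAT.search(s) and not LINK.search(s)]


# Hypothetical draft text.
draft = (
    "Organic traffic grew 42% last year [per our analytics report](https://www.example.com/report). "
    "Roughly 60% of buyers read reviews first. "
    "Our team is based in Berlin."
)
print(uncited_statistics(draft))
```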

5. Maintain Content Freshness

Regularly update your content. Many AI search products use retrieval-augmented generation (RAG), pulling recent data from indexed sources at query time, so outdated pages can lead to outdated answers.
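A simple freshness audit lists pages that have not been touched within a chosen window. The page inventory and the 365-day default below are assumptions for illustration; in practice the dates would come from your CMS or sitemap.

```python
from datetime import date, timedelta

# Hypothetical page inventory with last-modified dates.
PAGES = {
    "/guides/llm-optimization": date(2024, 1, 15),
    "/pricing": date(2024, 6, 1),
    "/about": date(2022, 3, 10),
}


def stale_pages(pages: dict, today: date, max_age_days: int = 365) -> list:
    """Return paths not updated within max_age_days, oldest first."""
    cutoff = today - timedelta(days=max_age_days)
    stale = [(modified, path) for path, modified in pages.items() if modified < cutoff]
    return [path for modified, path in sorted(stale)]


print(stale_pages(PAGES, today=date(2024, 12, 1)))
```

When a flagged page is genuinely revised, updating its visible date and the schema.org dateModified property in its Article markup keeps the change machine-readable.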

6. Publish in Crawlable, Text-Based Formats

Avoid locking critical information inside images or client-side scripts. Use HTML-first presentation with descriptive alt text and semantic structure.
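A small audit using Python's standard-library HTML parser can list images that lack alt text. This is a sketch over a hypothetical HTML snippet. Note that an empty alt is valid for purely decorative images, so flagged items need a human decision rather than automatic fixes.

```python
from html.parser import HTMLParser


class AltTextAuditor(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or empty."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")  # None if absent or written as a bare attribute
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src", "<no src>"))

    # HTMLParser's default handle_startendtag calls handle_starttag,
    # so self-closing <img ... /> tags are audited too.


# Hypothetical page fragment.
html = (
    '<h1>Pricing</h1><img src="chart.png">'
    '<img src="logo.png" alt="Example Co logo"/>'
    '<img src="spacer.gif" alt="">'
)
auditor = AltTextAuditor()
auditor.feed(html)
auditor.close()
print(auditor.missing_alt)
```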

7. Strengthen Author and Brand Signals

Build consistent E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) signals: show author credentials, verified social profiles, and detailed organization pages linked through schema.
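"Linked through schema" can be done with JSON-LD `@id` references, so the author, the article, and the organization point at one another instead of repeating details. The sketch assumes a hypothetical author "Jane Doe" and reuses a hypothetical organization `@id`; all URLs are placeholders.

```python
import json

# Hypothetical @id, assumed to match the site's Organization markup.
ORG_ID = "https://www.example.com/#organization"

author = {
    "@context": "https://schema.org",
    "@type": "Person",
    "@id": "https://www.example.com/authors/jane-doe#person",
    "name": "Jane Doe",
    "jobTitle": "Head of SEO",
    "url": "https://www.example.com/authors/jane-doe",
    "sameAs": ["https://www.linkedin.com/in/jane-doe-example"],
    "worksFor": {"@id": ORG_ID},  # reference, not a copy, of the Organization
}

article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "What Is LLM Optimization?",
    "author": {"@id": author["@id"]},
    "publisher": {"@id": ORG_ID},
}

print(json.dumps([author, article], indent=2))
```

Keeping each entity defined once and referenced by `@id` makes it harder for the author or organization details to drift out of sync across pages.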

LLM Optimization vs Traditional SEO

| Feature | Traditional SEO | LLM Optimization |
|---|---|---|
| Goal | Rank higher on SERPs | Be referenced or retrieved by AI systems |
| Core Focus | Keywords, backlinks | Entities, semantics, factual clarity |
| Data Type | Unstructured text | Structured + semantic data |
| Crawlers | Search engine bots | AI crawlers and embedding pipelines |
| Primary Output | SERP listings | AI-generated summaries and citations |

Technical Foundations of LLM Optimization

- Vector Embeddings: Represent your content’s meaning numerically for AI retrieval systems.

- Retrieval-Augmented Generation (RAG): LLM-based systems retrieve current documents at query time to improve factual reliability.

- Knowledge Graph Alignment: Ensures your brand and entities are recognized in AI knowledge bases.

- Citation Mapping: Strengthens how models connect facts to original sources.

- Content Consistency: Reduces ambiguity so your brand is represented consistently across the domains and platforms where it appears.
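RAG and citation mapping meet at the point where retrieved passages are handed to the model. The sketch below shows one generic way to do it: number each passage, keep a map from marker to source URL, and ask the model to cite by number. The prompt wording, the `Passage` type, and the sample pages are hypothetical; production AI search products do not publish their exact formats.

```python
from dataclasses import dataclass


@dataclass
class Passage:
    url: str
    text: str


def build_grounded_prompt(question: str, passages: list) -> tuple:
    """Number retrieved passages and return (prompt, citation_map).

    citation_map maps each marker such as "[1]" back to its source URL, so
    claims in the generated answer can be traced to the original page.
    """
    citation_map = {}
    context_lines = []
    for i, passage in enumerate(passages, start=1):
        marker = f"[{i}]"
        citation_map[marker] = passage.url
        context_lines.append(f"{marker} {passage.text}")
    prompt = (
        "Answer using only the sources below and cite them by number.\n\n"
        + "\n".join(context_lines)
        + f"\n\nQuestion: {question}"
    )
    return prompt, citation_map


# Hypothetical retrieved passages.
passages = [
    Passage("https://www.example.com/about", "Example Co is based in Berlin."),
    Passage("https://www.example.com/contact", "Contact Example Co by email."),
]
prompt, citations = build_grounded_prompt("Where is Example Co based?", passages)
print(prompt)
print(citations)
```

Pages whose passages state one fact clearly are easier to cite accurately under a scheme like this, because each marker maps to a self-contained claim.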

Tools and Techniques

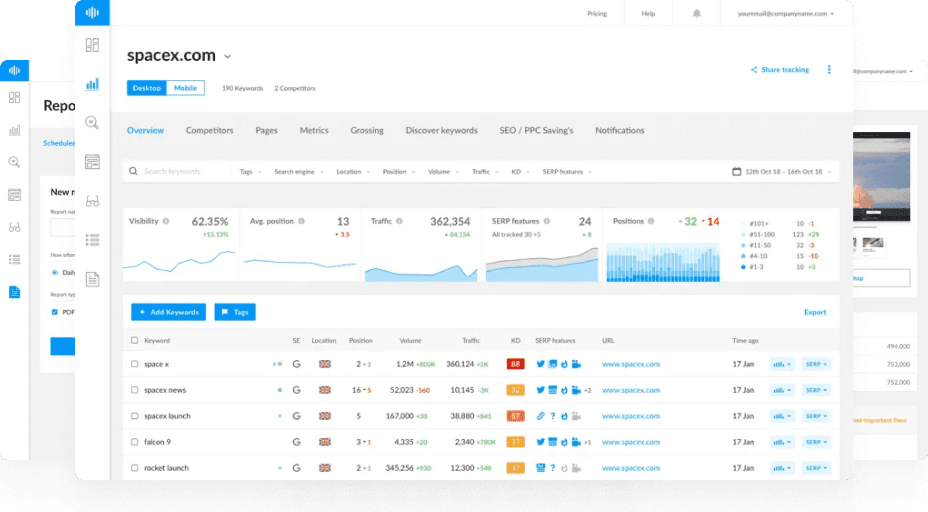

- Ranktracker Web Audit: Identify schema gaps and entity mismatches.

- Keyword Finder: Discover semantically related keywords for context-rich optimization.

- AI Article Writer: Produce structured, factual content suitable for both SEO and AI engines.

- SERP Checker: Monitor AI-augmented search features and their visibility impact.

The Future of LLM Optimization

As LLMs become the foundation for more digital interfaces, from search to virtual assistants, optimizing for them is likely to become as critical as traditional SEO.

Expect to see:

- LLM visibility analytics (tracking mentions and citations).

- AI trust scoring for web entities.

- Cross-model optimization where one content strategy serves multiple AI ecosystems.
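LLM visibility analytics can already be approximated by hand: run a fixed set of prompts through the AI assistants you care about, save each answer and its cited URLs, and measure how often your brand is mentioned or your domain is cited. The sketch below assumes that collection step has been done; the answer records, brand, and domain are hypothetical.

```python
import re
from urllib.parse import urlparse


def visibility_report(answers: list, brand: str, domain: str) -> dict:
    """Summarize how often a brand is mentioned and its domain cited.

    Each answer is a dict with 'text' (the generated answer) and
    'citations' (a list of source URLs shown with it).
    """
    mention = re.compile(rf"\b{re.escape(brand)}\b", re.IGNORECASE)
    mentioned = cited = 0
    for answer in answers:
        if mention.search(answer["text"]):
            mentioned += 1
        hosts = {urlparse(url).hostname or "" for url in answer["citations"]}
        # Count the apex domain and any subdomain (e.g. www.example.com).
        if any(h == domain or h.endswith("." + domain) for h in hosts):
            cited += 1
    total = len(answers)
    return {
        "answers": total,
        "mention_rate": mentioned / total if total else 0.0,
        "citation_rate": cited / total if total else 0.0,
    }


# Hypothetical answers collected from a fixed prompt set.
answers = [
    {"text": "Example Co offers rank tracking.", "citations": ["https://www.example.com/pricing"]},
    {"text": "Popular tools include several trackers.", "citations": ["https://other.test/review"]},
    {"text": "Some reviewers mention example co.", "citations": []},
    {"text": "No relevant answer.", "citations": []},
]
print(visibility_report(answers, brand="Example Co", domain="example.com"))
```

Tracking these two rates over time, rather than any single answer, gives a steadier signal because generated answers vary from run to run.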

Summary

LLM Optimization is the bridge between SEO and AI. It ensures that your content is structured, factual, and contextual enough to be retrieved, cited, and trusted by large language models.

As AI becomes a primary interface for discovery, mastering LLM Optimization will help determine who gets referenced and who disappears from the conversation.